Big data is now a solid reality for many firms in the financial industry. However, along with the traditional three Vs of big data (volume, variety and velocity), financial firms must consider a fourth: vulnerability. Managing big data effectively is an increasingly difficult task, and protecting the vast amount of critical information it generates is more essential than ever.

The need to secure the storage, transit and use of corporate and personal data across business applications has always been important to financial institutions – and never more so than in the modern age when online banking and electronic communications reign supreme.

More than ever, the global nature of financial services makes it necessary to address international data security and privacy regulations comprehensively, writes Daniel Gutierrez for Insidebigdata.com.

Part of what makes financial services so vulnerable is their position as the top target for cybercrime. Security breaches at financial firms invariably attract the most media attention, and when breaches do occur, much more than money is at stake. Customers need to trust their banks with their money, so when banks are hit by fraud and attacks, that hard-earned loyalty and trust diminish.

At the same time, many financial firms are using big data to detect and prevent fraud, because it makes continuous or behavioral authentication possible. Analysis allows organizations to understand their customers' activity patterns, share data about attack vectors, and rely more on data to predict attacks based on trends affecting the industry.
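As a rough illustration of what learning a customer's activity pattern can mean, the Python sketch below flags a transaction whose amount deviates sharply from that customer's own history. The z-score rule, the hypothetical `is_unusual` function and the threshold of 3 standard deviations are assumptions chosen for illustration; real behavioral authentication and fraud systems draw on many more signals (device, location, timing) and more sophisticated models.

```python
from statistics import mean, stdev


def is_unusual(history, amount, threshold=3.0):
    """Hypothetical check: flag `amount` if it lies more than `threshold`
    standard deviations from the mean of the customer's past amounts."""
    if len(history) < 2:
        return False  # too little history to establish a pattern
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # Every past transaction was identical; any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


past = [42.0, 38.5, 51.2, 45.0, 40.3, 47.8]
print(is_unusual(past, 44.0))   # False: in line with the usual pattern
print(is_unusual(past, 950.0))  # True: far outside the usual pattern
```

The per-customer baseline is the point of the design: the same amount can be routine for one account and suspicious for another.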

However, the vast amount of data this requires, together with the sensitive nature of the financial industry, adds a substantial operational burden for businesses. Specifically, regulations such as the Dodd-Frank Act require firms to maintain records for at least five years, and the Sarbanes-Oxley Act requires firms to retain audit work papers and other required information for at least seven years.
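When a record falls under more than one regime, the longest applicable period governs. The Python sketch below encodes only the two minimums named above. It simplifies by assuming the retention clock starts at the record's creation date, whereas actual rules tie the start to specific events and record types. The `RETENTION_YEARS` mapping and `earliest_disposal` function are hypothetical names for illustration, not legal guidance.

```python
from datetime import date

# Minimum retention periods in years, taken from the text above.
# Hypothetical mapping: real obligations vary by record type and trigger event.
RETENTION_YEARS = {
    "dodd_frank": 5,
    "sarbanes_oxley": 7,
}


def add_years(d, years):
    """Return the same calendar date `years` later (Feb 29 falls back to Feb 28)."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def earliest_disposal(created, regimes):
    """Earliest date a record may be disposed of: the longest applicable period wins."""
    years = max(RETENTION_YEARS[r] for r in regimes)
    return add_years(created, years)


print(earliest_disposal(date(2014, 3, 1), ["dodd_frank", "sarbanes_oxley"]))  # 2021-03-01
```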

Added to this is the need for these records to be available ‘on demand,’ and often ‘normalized’ and sent to regulators proactively. Maintaining an efficient and large-scale data management infrastructure is key to achieving this and to optimizing firms’ business operations. Using big data solutions, driven by a stable and dependable platform, allows financial services firms to optimize capital leverage while maintaining the reserves required by regulators.

As well as allowing companies to keep up with their compliance commitments, a good platform should unlock a financial firm's data assets for other activities such as market risk modeling. The detailed data can give banks better insight into the material behavior of complex financial instruments. Consumer risk can also be modeled, providing greater insight into capital availability and liquidity.

The far-reaching effects of the 2008 economic meltdown were largely due to a lack of visibility into consumer actions and into groups with related risk profiles. By using the data they already collect for compliance to analyze market behavior in this way, firms may help prevent a similar crash in the future.

The security principles set forth in industry standard ISO/IEC 27002 provide a framework for effective security, built around the cycle of Plan, Do, Check, and Act (PDCA). Many good security products are on the market, but each is designed to counter specific threats and will not block others. At GRT Corp., our security philosophy is built around these words by noted security expert Dr. Bruce Schneier: "Security is not a product, but a process."

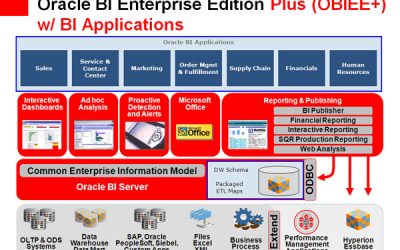